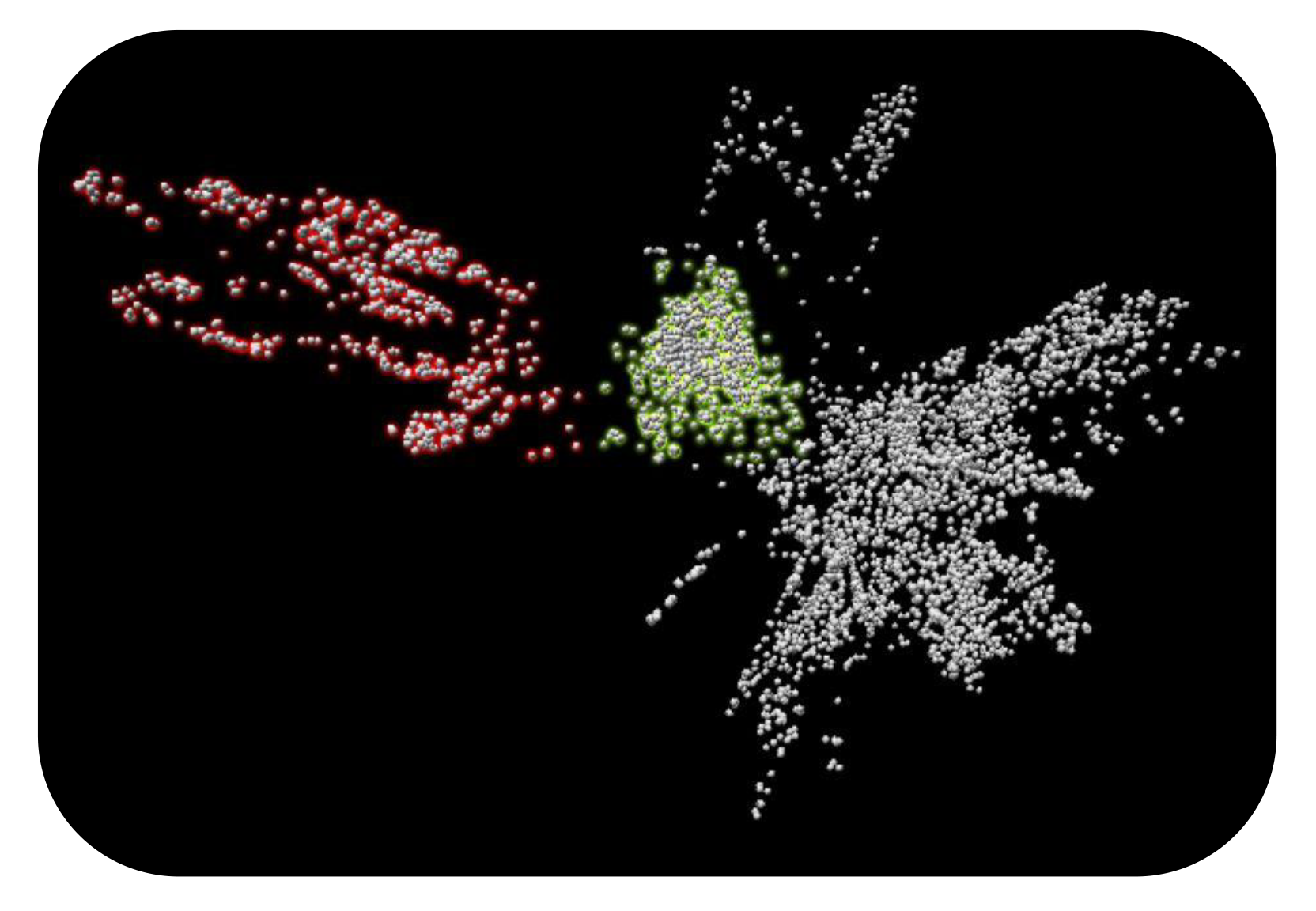

Computers are reasonably good at analyzing large datasets, but there is one class of problem where they require a bit of help from puny humans: high-dimensional datasets. By “high-dimensional” we mean “wide”, as in lots of columns. When we have wide data, it’s very hard to spot commonalities across a number of those columns. For example, if we have data from a large number of sensors, and all of them have something to say about what’s going on, it’s difficult to detect what those readings have in common when a particular type of event occurs.

Ian Bailey

Ian’s PhD at Brunel University focused on the design of a fourth-generation mapping / ETL language.

He is a recognised industry expert in enterprise scale data architecture.

Ian was technical lead for both the MOD and NATO Enterprise Architecture Frameworks.

He was elected a Fellow of the IET in 2010, a Fellow of the BCS in 2011 and a Fellow of the IMechE in 2013.

Ian holds a PhD in Computer Science.